1) What is the limit of F(n) when n is large, where F(n) is the n-th term in the Fibonacci series?

Ans:

The ratio between consecutive terms, x, approaches the golden ratio phi. Proof: we posit that

F(n) = x^n * F(0) = x^(n-1) * F(0) + x^(n-2) * F(0)

x^n = x^(n-1) + x^(n-2)

x = 0.5 * (1 +- sqrt(5))

The two values are phi and 1/phi, respective.

2) Write functions to compute F(n) and comment on the order of the algorithm.

Ans:

Recursive approach -

int Fib(int n){

if (n = 0) {Fib = 0}

else if (n = 1) {Fib = 1}

else {

Fib = Fib(n-1) + Fib(n-2)

}

return Fib;

}

Order ~ O(phi^n)

Note: alternatively we can store the intermediate values in a cache to reduce redundancy (for example to find F(4) we have to calculate F(2) = F(3-1) and F(2) = F(4-2) twice, which is a waste of time).

Bottom-up approach -

int Fib(int n){

temp1 = 0;

temp2 = 1;

if (n = 0) {Fib = 0}

if (n = 1) {Fib = 1}

for (int i = 0; i <= n; i++){

run_sum = temp1 + temp2;

temp1 = temp2;

temp2 = run_sum;

}

return run_sum;

}

Order ~ O(n)

See here for more interview questions/brainteasers

Friday, June 24, 2011

Tuesday, June 21, 2011

Quant Interview Questions (Equity Exotic Derivatives)

1) What is the Taylor expansion of exp(-1/x^2)?

Ans:

This function cannot be expanded at x = 0 because there is a singularity: the function is NOT analytic at x = 0.

http://en.wikipedia.org/wiki/Taylor_series#Analytic_functions

2) Suppose we have the prices of options with various strikes. How can we price an option with arbitrary payoff?

Ans:

Solve the Fokker-Planck Equation to obtain the risk-neutral probability density p(S). Then the price of an option with arbitrary payoff can be calculated as

exp(-r (T-t)) \int Payoff(S) p(S) dS

Note: In case of vanilla European call, p(S) can be found by the second partial derivative of the call price w.r.t. strike K

3) Suppose we have a volatility smile (same expiry, different strikes). What no-arbitrage condition can be imposed on the option implied volatilities (i.e. prices)?

Ans:

Recall the bull spread relation and the butterfly spread relation. In the continuous limit, they read

0 < -dC/dK < 1 [Note the negative sign]

d^2C/dK^2 > 0

4) Given two stock price time series of two stocks. After computing the correlation using the naive approach (rolling covariance divided by rolling standard deviations), the correlation matrix is NOT PSD. How can this be fixed?

Ans:

??

See here for more interview questions/brainteasers

Ans:

This function cannot be expanded at x = 0 because there is a singularity: the function is NOT analytic at x = 0.

http://en.wikipedia.org/wiki/Taylor_series#Analytic_functions

2) Suppose we have the prices of options with various strikes. How can we price an option with arbitrary payoff?

Ans:

Solve the Fokker-Planck Equation to obtain the risk-neutral probability density p(S). Then the price of an option with arbitrary payoff can be calculated as

exp(-r (T-t)) \int Payoff(S) p(S) dS

Note: In case of vanilla European call, p(S) can be found by the second partial derivative of the call price w.r.t. strike K

3) Suppose we have a volatility smile (same expiry, different strikes). What no-arbitrage condition can be imposed on the option implied volatilities (i.e. prices)?

Ans:

Recall the bull spread relation and the butterfly spread relation. In the continuous limit, they read

0 < -dC/dK < 1 [Note the negative sign]

d^2C/dK^2 > 0

4) Given two stock price time series of two stocks. After computing the correlation using the naive approach (rolling covariance divided by rolling standard deviations), the correlation matrix is NOT PSD. How can this be fixed?

Ans:

??

See here for more interview questions/brainteasers

Sunday, June 12, 2011

Barrier and Asian Options

In hedging barrier and Asian options, we do not need an extra asset in addition to the underlying stock. That is to say, although the payoff and value of the barrier (Asian) option does depend on the running extremum (average) process, such a process does not introduce an extra source of uncertainty (however they do introduce an extra dimension in numerical pricing).

- For barrier option, the running extremum can be handled using reflection principle.

- For Asian option, the running average can be handled by having an auxiliary process. Once again, no additional source of uncertainty is introduced by doing this.

See Shreve.

- For barrier option, the running extremum can be handled using reflection principle.

- For Asian option, the running average can be handled by having an auxiliary process. Once again, no additional source of uncertainty is introduced by doing this.

See Shreve.

Friday, June 10, 2011

C++ Advanced Concepts

1) Initialization List

http://www.learncpp.com/cpp-tutorial/101-constructor-initialization-lists/

Also, http://www.cprogramming.com/tutorial/initialization-lists-c++.html:

"Before the body of the constructor is run, all of the constructors for its parent class and then for its fields are invoked. By default, the no-argument constructors are invoked. Initialization lists allow you to choose which constructor is called and what arguments that constructor receives.

If you have a reference or a const field, or if one of the classes used does not have a default constructor, you must use an initialization list."

2) Overloading Function and Copy Constructor

http://www.learncpp.com/cpp-tutorial/911-the-copy-constructor-and-overloading-the-assignment-operator/

3) Virtual Constructor Function

See Eckel "Thinking in C++":

"If you want to be able to call a virtual function inside the constructor and have it do the right thing, you must use a technique to simulate a virtual constructor. This is a conundrum. Remember, the idea of a virtual function is that you send a message to an object and let the object figure out the right thing to do. But a constructor builds an object. So a virtual constructor would be like saying, “I don’t know exactly what kind of object you are, but build the right type anyway.” In an ordinary constructor, the compiler must know which VTABLE address to bind to the VPTR, and even if it existed, a virtual constructor couldn’t do this because it doesn’t know all the type information at compile time. It makes sense that a constructor can’t be virtual because it is the one function that absolutely must know everything about the type of the object.

Why we need virtual destructor is exactly because of polymorphism. Consider if we have BaseClass and ChildClass. If we do this:

BaseClass *p = new ChildClass;

delete p;

then we fail to delete the ChildClass object! Although the pointer of the BaseClass can point to an object of the ChildClass, the command delete could only get rid of a BaseClass object - unless it has a virtual destructor!

http://blogs.msdn.com/b/oldnewthing/archive/2004/05/07/127826.aspx

5) Avoiding changing value when passing by reference

Use the technique of passing by const reference (http://www.learncpp.com/cpp-tutorial/73-passing-arguments-by-reference):

"One of the major disadvantages of pass by value is that all arguments passed by value are copied to the parameters. When the arguments are large structs or classes, this can take a lot of time. References provide a way to avoid this penalty. When an argument is passed by reference, a reference is created to the actual argument (which takes minimal time) and no copying of values takes place. This allows us to pass large structs and classes with a minimum performance penalty.

http://www.learncpp.com/cpp-tutorial/101-constructor-initialization-lists/

Also, http://www.cprogramming.com/tutorial/initialization-lists-c++.html:

"Before the body of the constructor is run, all of the constructors for its parent class and then for its fields are invoked. By default, the no-argument constructors are invoked. Initialization lists allow you to choose which constructor is called and what arguments that constructor receives.

If you have a reference or a const field, or if one of the classes used does not have a default constructor, you must use an initialization list."

2) Overloading Function and Copy Constructor

http://www.learncpp.com/cpp-tutorial/911-the-copy-constructor-and-overloading-the-assignment-operator/

3) Virtual Constructor Function

See Eckel "Thinking in C++":

"If you want to be able to call a virtual function inside the constructor and have it do the right thing, you must use a technique to simulate a virtual constructor. This is a conundrum. Remember, the idea of a virtual function is that you send a message to an object and let the object figure out the right thing to do. But a constructor builds an object. So a virtual constructor would be like saying, “I don’t know exactly what kind of object you are, but build the right type anyway.” In an ordinary constructor, the compiler must know which VTABLE address to bind to the VPTR, and even if it existed, a virtual constructor couldn’t do this because it doesn’t know all the type information at compile time. It makes sense that a constructor can’t be virtual because it is the one function that absolutely must know everything about the type of the object.

And yet there are times when you want something approximating the behavior of a virtual constructor..."

4) Virtual DestructorWhy we need virtual destructor is exactly because of polymorphism. Consider if we have BaseClass and ChildClass. If we do this:

BaseClass *p = new ChildClass;

delete p;

then we fail to delete the ChildClass object! Although the pointer of the BaseClass can point to an object of the ChildClass, the command delete could only get rid of a BaseClass object - unless it has a virtual destructor!

http://blogs.msdn.com/b/oldnewthing/archive/2004/05/07/127826.aspx

5) Avoiding changing value when passing by reference

Use the technique of passing by const reference (http://www.learncpp.com/cpp-tutorial/73-passing-arguments-by-reference):

"One of the major disadvantages of pass by value is that all arguments passed by value are copied to the parameters. When the arguments are large structs or classes, this can take a lot of time. References provide a way to avoid this penalty. When an argument is passed by reference, a reference is created to the actual argument (which takes minimal time) and no copying of values takes place. This allows us to pass large structs and classes with a minimum performance penalty.

However, this also opens us up to potential trouble. References allow the function to change the value of the argument, which in many cases is undesirable. If we know that a function should not change the value of an argument, but don’t want to pass by value, the best solution is to pass by const reference."

cf. http://iagtm.blogspot.com/2010/02/c-function-passing-by.html

Quant Interview Questions (Model Validation 2)

1) Consider the SDE dr = a r dt + b dW. What is the solution for r?

Ans:

This is a special case of O-U process. Try taking derivative of $ f \equiv r_t e^{-at} $

2) Toss a fair coin for 100 times. What is (approximately) the probability Pr(# of H >= 60)?

Ans:

Consider the binomial distribution

n C k*p^k*(1-p)^(n-k)

The mean of the distribution is n*p and the variance is n*p*(1-p). When n is large, the binomial distribution converges to normal distribution with mean = n*p and variance = n*p*(1-p). Hence the standard deviation in this case is 5, and H >= 60 would be two standard deviation away from the mean. The answer is therefore (1 - 95.4%)/2 = 2.3%.

3) What is copula and how can it be used for modeling correlated processes?

Ans:

Let F_X and G_Y be the cdf of random variables X and Y, and they are the marginal distribution of the bivariate distribution H_XY. Using the fact that U = F_X(X) and V = G_Y(Y) both have uniform distribution from 0 to 1 (because F_X(X) is the percentile function). Sklar's Theorem states that there exists a copula function C such that

H_XY = C(F_X, G_Y)

In other words, we can construct the joint distribution using the marginal distributions.

Reference: http://en.wikipedia.org/wiki/Copula_%28statistics%29

4) Why is holding the equity piece of a CDO longing default correlation?

Ans:

Holding the senior tranche is a short position in correlation - that is easy to understand, because the probability of loss for the senior tranche is low as long as not too many assets in the pool default together in a bad credit environment. However, for the equity piece it seems that it will not actively benefit from high correlation. The way to think of it is to consider when credit environment is good, a high correlation ensures that no asset in the pool goes default.

5) Suppose x is a continuous observable variable, Y* is a binary observable variable, and Y is a latent variable. The following relations hold:

Y = b x + e, e ~ N(0,1)

Y* = 1 (if Y >= 0) or 0 (if Y < 0)

Suppose we have a set of data (x, Y*). How can be calibrate the model (i.e. fit b)?

Ans:

This problem can be solved by MLE. Since e ~ N(0,1), we have Y ~ N(b x, 1). The probability of Y* = 1 (or equivalently Y >= 0) is just the cumulative normal probability density. For each of the data point (x_i, Y*_i) we have such an F(Y). Thus the likelihood function is

Product(F_i(Y))

and we fit b by maximizing this function.

6) A, B and C are playing a game with gemstones. Each of them starts with 5 stones with different colors (blue, red, green, yellow, white). There are 3 rounds in the game. In the first round, A pick one stone randomly from B and one from C; in the second round B pick one stone randomly from C and one from A; in the third round C pick one stone randomly from A and one from B. What is the probability that at the end of the game each person would have 5 stones with different colors?

Ans:

0.015873 (MC verification)

Edit: Thanks to M Millar, the right answer should be 0.0413. The tree looks like

-- C gets the right stones (1/9)

-- B takes original from A (3/7) --

| -- C fails (dead)

-- A draws same color (1/5) --

| -- B fails to take original from A (dead)

--

| -- B takes original from A (2/7) -- C gets the right stones (1/9)

| |

| | -- B takes the right color from C (1/4) -- C gets the right stones (1/9)

-- A draws diff colors (4/5) ---- B takes the other duplicated color from A (2/7) --

| -- B takes the wrong color from C (dead)

-- B removes one of the three lone colors (dead)

See here for more interview questions/brainteasers

Ans:

This is a special case of O-U process. Try taking derivative of $ f \equiv r_t e^{-at} $

2) Toss a fair coin for 100 times. What is (approximately) the probability Pr(# of H >= 60)?

Ans:

Consider the binomial distribution

n C k*p^k*(1-p)^(n-k)

The mean of the distribution is n*p and the variance is n*p*(1-p). When n is large, the binomial distribution converges to normal distribution with mean = n*p and variance = n*p*(1-p). Hence the standard deviation in this case is 5, and H >= 60 would be two standard deviation away from the mean. The answer is therefore (1 - 95.4%)/2 = 2.3%.

3) What is copula and how can it be used for modeling correlated processes?

Ans:

Let F_X and G_Y be the cdf of random variables X and Y, and they are the marginal distribution of the bivariate distribution H_XY. Using the fact that U = F_X(X) and V = G_Y(Y) both have uniform distribution from 0 to 1 (because F_X(X) is the percentile function). Sklar's Theorem states that there exists a copula function C such that

H_XY = C(F_X, G_Y)

In other words, we can construct the joint distribution using the marginal distributions.

Reference: http://en.wikipedia.org/wiki/Copula_%28statistics%29

4) Why is holding the equity piece of a CDO longing default correlation?

Ans:

Holding the senior tranche is a short position in correlation - that is easy to understand, because the probability of loss for the senior tranche is low as long as not too many assets in the pool default together in a bad credit environment. However, for the equity piece it seems that it will not actively benefit from high correlation. The way to think of it is to consider when credit environment is good, a high correlation ensures that no asset in the pool goes default.

5) Suppose x is a continuous observable variable, Y* is a binary observable variable, and Y is a latent variable. The following relations hold:

Y = b x + e, e ~ N(0,1)

Y* = 1 (if Y >= 0) or 0 (if Y < 0)

Suppose we have a set of data (x, Y*). How can be calibrate the model (i.e. fit b)?

Ans:

This problem can be solved by MLE. Since e ~ N(0,1), we have Y ~ N(b x, 1). The probability of Y* = 1 (or equivalently Y >= 0) is just the cumulative normal probability density. For each of the data point (x_i, Y*_i) we have such an F(Y). Thus the likelihood function is

Product(F_i(Y))

and we fit b by maximizing this function.

6) A, B and C are playing a game with gemstones. Each of them starts with 5 stones with different colors (blue, red, green, yellow, white). There are 3 rounds in the game. In the first round, A pick one stone randomly from B and one from C; in the second round B pick one stone randomly from C and one from A; in the third round C pick one stone randomly from A and one from B. What is the probability that at the end of the game each person would have 5 stones with different colors?

Ans:

Edit: Thanks to M Millar, the right answer should be 0.0413. The tree looks like

-- C gets the right stones (1/9)

-- B takes original from A (3/7) --

| -- C fails (dead)

-- A draws same color (1/5) --

| -- B fails to take original from A (dead)

--

| -- B takes original from A (2/7) -- C gets the right stones (1/9)

| |

| | -- B takes the right color from C (1/4) -- C gets the right stones (1/9)

-- A draws diff colors (4/5) ---- B takes the other duplicated color from A (2/7) --

| -- B takes the wrong color from C (dead)

-- B removes one of the three lone colors (dead)

See here for more interview questions/brainteasers

Labels:

coin tossing,

credit,

interview,

statistics,

stochastic calculus,

wishlist

Thursday, June 9, 2011

Quant Interview Questions (Interest Rate Strat.)

1)

Suppose you have a fair coin. How do you create an event A such that Pr(A) = 1/3?

Ans:

Toss the coin twice to produce the following space: {HH, HT, TH, TT}. Discard one of the element in the set. Each of the remaining outcome represents an event A.

Note: This recipe can be extended to producing an event with probability m/n, m and n being integers.

2)

Suppose you have a fair coin. How do you create an event A such that Pr(A) = r, r being an irrational number between 0 and 1?

Ans:

Translate r into a binary string. For example, 0.27314... would be {10}{0111}{011}{01}{100}... We start tossing the coin until we can tell if the string formed by coin tossing is greater/less than r in binary, with H = 1 and T = 0. For example, the first step is to toss twice. If we have {TH} = {01}, then the string formed by coin tossing must be less than r in binary. We terminate and conclude that the event belongs to A; If we have {HH} = {11}, then the string formed by coin tossing must be greater than r in binary. We terminate and conclude that the event does not belong to A. If the two tosses produce {HT} = {10} then we cannot conclude, and have to move on to the next digit {111}, and so on. (MC verification)

Note: We have to append a zero in front of {111}, {11} and {1} because otherwise we cannot create a greater binary, i.e. 111 is the greatest 3-digit binary number.

See here for more interview questions/brainteasers

Suppose you have a fair coin. How do you create an event A such that Pr(A) = 1/3?

Ans:

Toss the coin twice to produce the following space: {HH, HT, TH, TT}. Discard one of the element in the set. Each of the remaining outcome represents an event A.

Note: This recipe can be extended to producing an event with probability m/n, m and n being integers.

2)

Suppose you have a fair coin. How do you create an event A such that Pr(A) = r, r being an irrational number between 0 and 1?

Ans:

Translate r into a binary string. For example, 0.27314... would be {10}{0111}{011}{01}{100}... We start tossing the coin until we can tell if the string formed by coin tossing is greater/less than r in binary, with H = 1 and T = 0. For example, the first step is to toss twice. If we have {TH} = {01}, then the string formed by coin tossing must be less than r in binary. We terminate and conclude that the event belongs to A; If we have {HH} = {11}, then the string formed by coin tossing must be greater than r in binary. We terminate and conclude that the event does not belong to A. If the two tosses produce {HT} = {10} then we cannot conclude, and have to move on to the next digit {111}, and so on. (MC verification)

Note: We have to append a zero in front of {111}, {11} and {1} because otherwise we cannot create a greater binary, i.e. 111 is the greatest 3-digit binary number.

See here for more interview questions/brainteasers

Wednesday, June 8, 2011

Quant Interview Questions (Model Validation)

1) Suppose Prob(Tail) = p. What is the probability distribution of N, where N is the event:

The first Head shows up at the N-th toss.

Ans:

The (discrete) probability density is simply

Pr(event) = p^(N-1) (1-p)

What is the expectation value of N?

Ans:

E[N] = Sum_(i=1)^(inf) i*p^(i-1) (1-p)

How to do this sum? Trick:

i*p^(i-1) = (d/dp)p^i

Using this and exchanging the order of summation and differentiation, we get

E[N] = 1/(1-p)

2) Suppose X and Y are two random variables with the same cdf, X, Y > 0 and E[X/Y] = 1. Show that E[X] = E[Y].

Ans:

Cauchy-Schwarz inequality, but how?

See here for more interview questions/brainteasers

The first Head shows up at the N-th toss.

Ans:

The (discrete) probability density is simply

Pr(event) = p^(N-1) (1-p)

What is the expectation value of N?

Ans:

E[N] = Sum_(i=1)^(inf) i*p^(i-1) (1-p)

How to do this sum? Trick:

i*p^(i-1) = (d/dp)p^i

Using this and exchanging the order of summation and differentiation, we get

E[N] = 1/(1-p)

2) Suppose X and Y are two random variables with the same cdf, X, Y > 0 and E[X/Y] = 1. Show that E[X] = E[Y].

Ans:

Cauchy-Schwarz inequality, but how?

See here for more interview questions/brainteasers

Monday, June 6, 2011

About Stochastic and Not-So-Stochastic Integrals

Here we consider 3 kinds of integrals. Suppose W is a Brownian motion, f is a stochastic function and g is a deterministic function.

1. $ \int_t^T W_s ds $

This entity turns out to be a normally distributed random variable, distributed as N(0, $ T^3 /3 $). [Missing: proof , can be found in Crack's HOTS pp. 154]

2. $ \int_t^T g dW_s $

This is not only a martingale, but also a normally distributed random variable N(0,$ \int_t^T g^2 ds $).

3. $ \int_t^T f dW_s $

This is a martingale, but the distribution is not (necessarily) normal.

One implication of 2. versus 3. is this.

1. $ \int_t^T W_s ds $

This entity turns out to be a normally distributed random variable, distributed as N(0, $ T^3 /3 $). [Missing: proof , can be found in Crack's HOTS pp. 154]

2. $ \int_t^T g dW_s $

This is not only a martingale, but also a normally distributed random variable N(0,$ \int_t^T g^2 ds $).

3. $ \int_t^T f dW_s $

This is a martingale, but the distribution is not (necessarily) normal.

One implication of 2. versus 3. is this.

Friday, June 3, 2011

Ito Calculus Revisited

Most General Case

In d-dimensions, Ito's Lemma reads

Note that, unlike in the specific case below, the dynamics of the process X is NOT specified.

Note that, unlike in the specific case below, the dynamics of the process X is NOT specified.

Specific Case

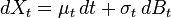

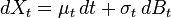

In one-dimension and assuming Ito's Lemma reads

Ito's Lemma reads

Notes: Partial f partial t comes from the fact the f is defined as f(t,X). cf. the general case above, where f=f(X). This so-called one-dimensional case is in fact 2-dimensional, with one of the dimension being deterministic (time).

Notes: Partial f partial t comes from the fact the f is defined as f(t,X). cf. the general case above, where f=f(X). This so-called one-dimensional case is in fact 2-dimensional, with one of the dimension being deterministic (time).

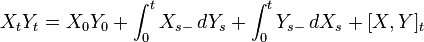

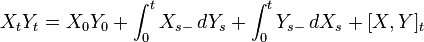

Ito's Product Rule

Suppose X and Y are processes driven by the same Brownian motion. Then

where d[X,Y]=dXdY.

where d[X,Y]=dXdY.

Note: This can be easily derived by considering a 2-dimensional Ito's Lemma, where f(X,Y)=XY.

Source of formulae images:

http://en.wikipedia.org/wiki/It%C5%8D_calculus

http://en.wikipedia.org/wiki/It%C5%8D%27s_lemma

In d-dimensions, Ito's Lemma reads

Note that, unlike in the specific case below, the dynamics of the process X is NOT specified.

Note that, unlike in the specific case below, the dynamics of the process X is NOT specified.Specific Case

In one-dimension and assuming

Ito's Lemma reads

Ito's Lemma reads Notes: Partial f partial t comes from the fact the f is defined as f(t,X). cf. the general case above, where f=f(X). This so-called one-dimensional case is in fact 2-dimensional, with one of the dimension being deterministic (time).

Notes: Partial f partial t comes from the fact the f is defined as f(t,X). cf. the general case above, where f=f(X). This so-called one-dimensional case is in fact 2-dimensional, with one of the dimension being deterministic (time).Ito's Product Rule

Suppose X and Y are processes driven by the same Brownian motion. Then

where d[X,Y]=dXdY.

where d[X,Y]=dXdY.Note: This can be easily derived by considering a 2-dimensional Ito's Lemma, where f(X,Y)=XY.

Source of formulae images:

http://en.wikipedia.org/wiki/It%C5%8D_calculus

http://en.wikipedia.org/wiki/It%C5%8D%27s_lemma

Wednesday, June 1, 2011

Structural vs Reduced-Form Models (Credit)

Structural Models:

Considers default as being driven by the firm's assets.

Example: Merton

Note: Structural Models produce poor bond prices, but OK to use them to calculate PD.

Reduced-Form Models:

Proposes a relationship between bond price/yield and default risk. Default risk proxied by hazard rate. Then use market price to back out the hazard rate (hazard rate term structure fitting).

Example: Duffie and Singleton (1999)

Considers default as being driven by the firm's assets.

Example: Merton

Note: Structural Models produce poor bond prices, but OK to use them to calculate PD.

Reduced-Form Models:

Proposes a relationship between bond price/yield and default risk. Default risk proxied by hazard rate. Then use market price to back out the hazard rate (hazard rate term structure fitting).

Example: Duffie and Singleton (1999)

Structural vs Reduced-Form Models (ABS)

Structural Models:

Treats prepayment as a (American) call option of the mortgage. Tries to solve for the optimal exercise strategy. Focuses on why loans terminate. Needs full information set for calibration (i.e., not just market prices). Computationally too expensive to be practical. Some primitive Structural Models do not allow for mortgages to exceed par.

Example: Dunn and McConnell (1981)

Reduced-Form Models:

Focuses on how (or, when) loans terminate. Needs only partial information set for calibration. Poor out-of-sample performance. Often are hazard models.

Example: Schwartz and Torous (1989)

Treats prepayment as a (American) call option of the mortgage. Tries to solve for the optimal exercise strategy. Focuses on why loans terminate. Needs full information set for calibration (i.e., not just market prices). Computationally too expensive to be practical. Some primitive Structural Models do not allow for mortgages to exceed par.

Example: Dunn and McConnell (1981)

Reduced-Form Models:

Focuses on how (or, when) loans terminate. Needs only partial information set for calibration. Poor out-of-sample performance. Often are hazard models.

Example: Schwartz and Torous (1989)

Subscribe to:

Posts (Atom)